About half of recent immigrants in the United States have a four-year college degree, up from about a third before the Great Recession. Although this trend is encouraging, three recent studies from the Center show that higher levels of education among immigrants do not necessarily translate to higher levels of skill. First, despite their faster educational gains, recent immigrants have not improved relative to natives on measures of income, poverty, and welfare consumption. Second, college-educated immigrants are more likely than college-educated natives to hold low-skill jobs, especially when the immigrants are from Latin America. Third, immigrants with foreign college or advanced degrees score lower on tests of English literacy, numeracy, and computer operations compared to natives with the same level of education.

One explanation for the disconnect between immigrant education and immigrant skills could be that the standards for college degrees differ across sending countries. To support that theory, this new analysis illustrates the wide range of skills among college graduates who live in Organisation for Economic Co-operation and Development (OECD) countries.

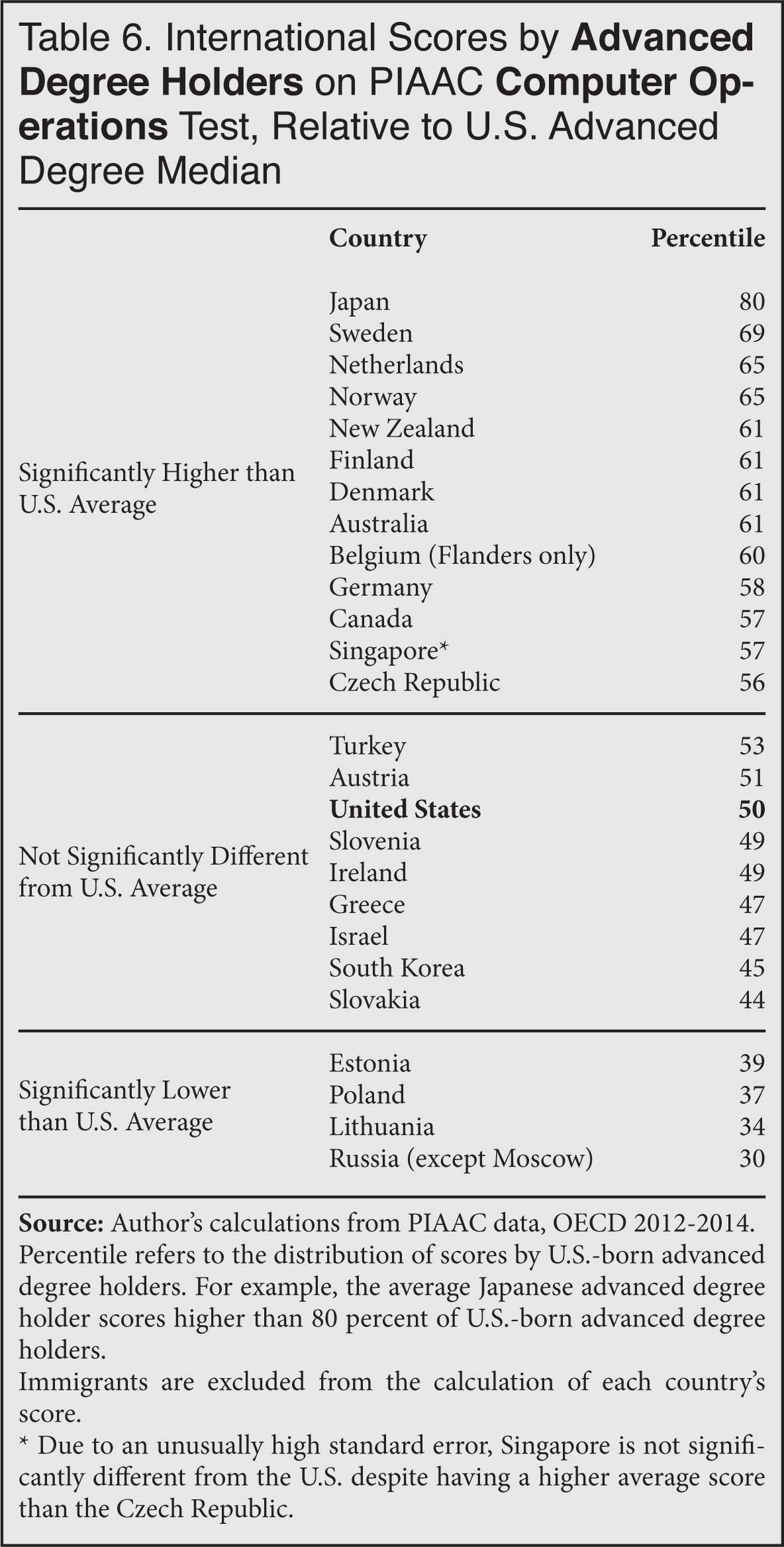

The results below come from the OECD's Program for the International Assessment of Adult Competencies (PIAAC) tests. They are the same literacy, numeracy, and computer operations tests used in the study of immigrants with foreign degrees linked above, but the test-takers are different. The foreign-degree study examined the test scores of immigrants in the United States. This analysis examines the test scores of people who live in other countries, with all immigrants excluded. In other words, the score for Japan reflects Japanese-born people who live in Japan, the score for Finland reflects Finnish-born people who live in Finland, and so on.

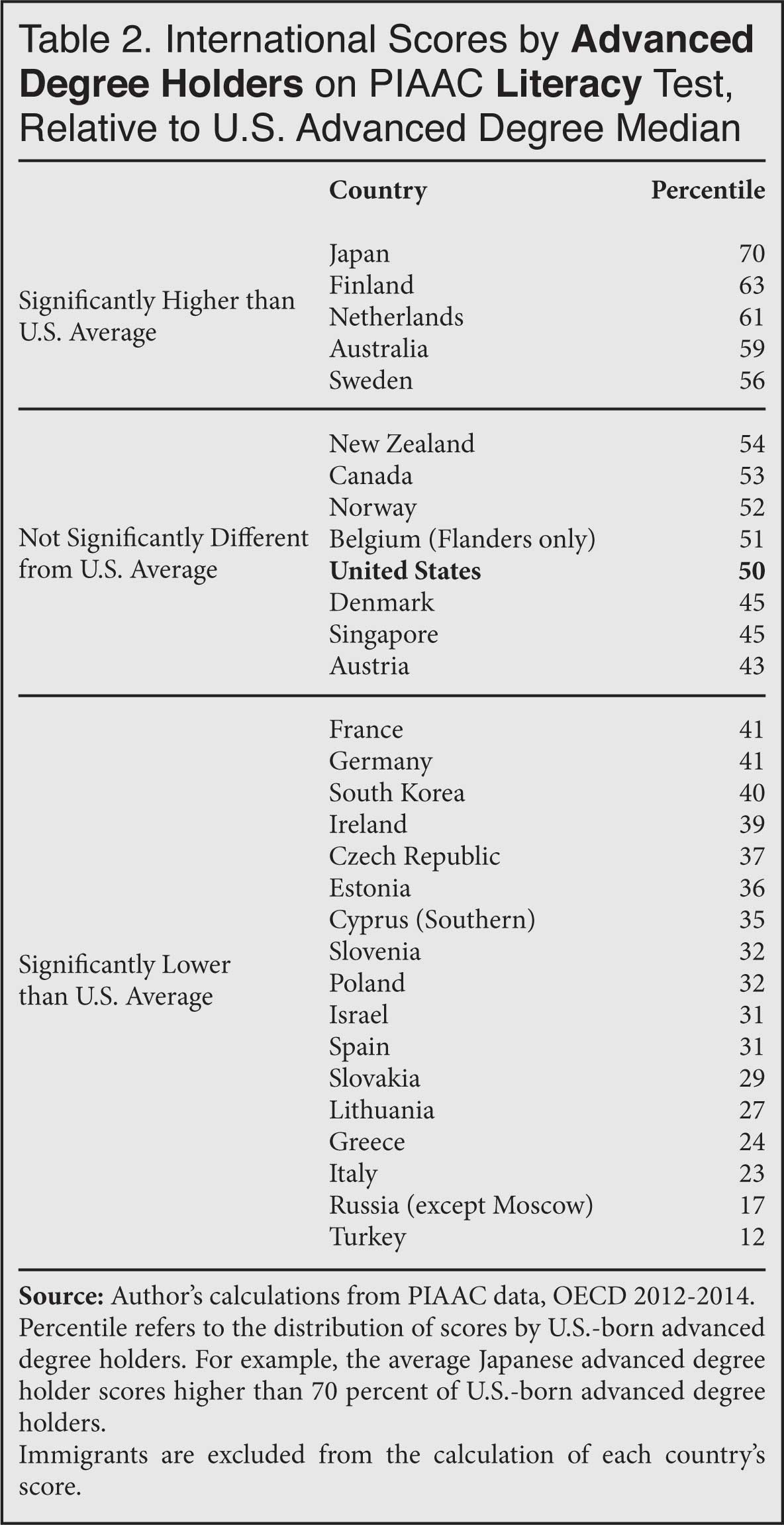

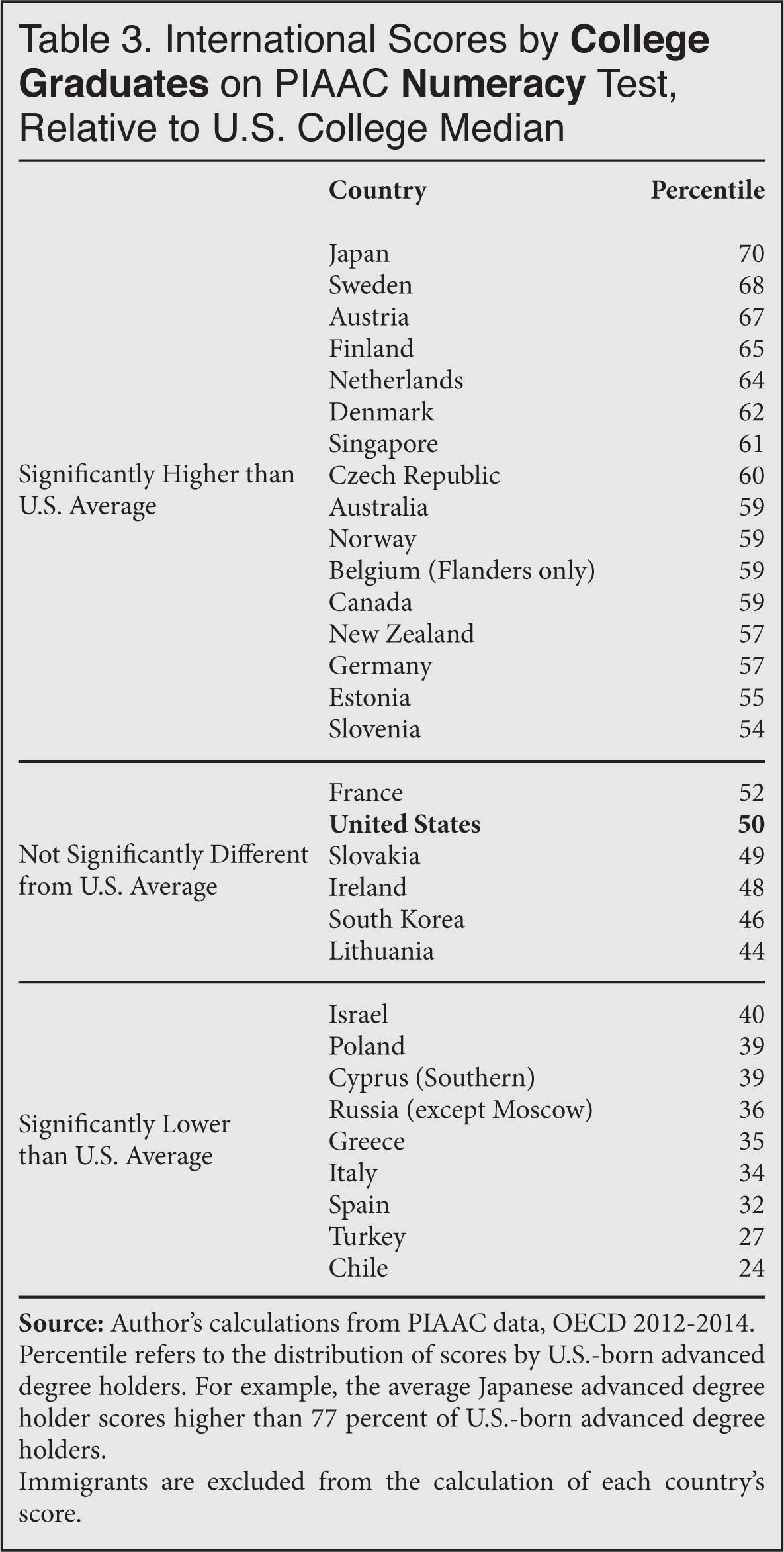

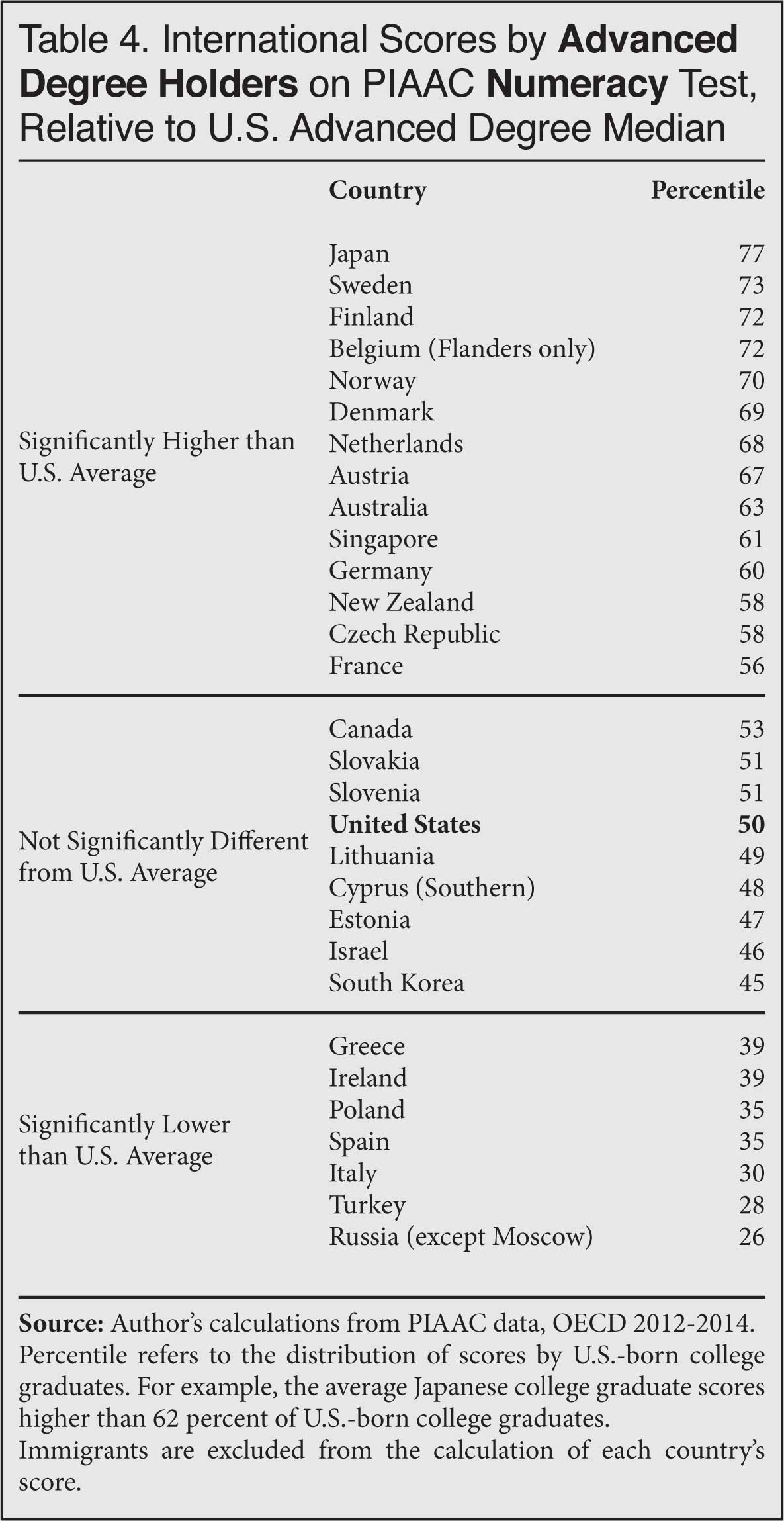

The scores are presented as percentiles on the U.S. distribution of college or advanced degree holders. For example, Table 1 shows that the average Japanese college graduate scores at the 62nd percentile of the U.S. distribution of literacy skill among college graduates, and Table 2 shows that the average Australian with an advanced degree scores at the 59th percentile of the U.S. distribution among advanced degree holders.
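The percentile placement described above can be sketched in a few lines. This is a minimal illustration, not the Center's actual procedure. It assumes the reported figure is the weighted share of U.S. degree holders scoring strictly below a country's average score. The function names and all scores below are hypothetical and made up for illustration. The real PIAAC calculations also rely on survey sampling weights and multiple plausible values per respondent, which this sketch reflects only loosely.

```python
from statistics import fmean


def weighted_percentile_rank(scores, weights, value):
    """Hypothetical helper: percent of the weighted reference population scoring strictly below `value`."""
    total = sum(weights)
    below = sum(w for s, w in zip(scores, weights) if s < value)
    return 100.0 * below / total


def country_percentile(us_scores, us_weights, country_scores):
    """Hypothetical helper: place one country's average score on the U.S. distribution."""
    country_mean = fmean(country_scores)
    return weighted_percentile_rank(us_scores, us_weights, country_mean)


# Made-up scores for illustration only (not PIAAC data).
us_scores = [250, 270, 290, 300, 310, 320, 330, 340, 350, 360]
us_weights = [1] * len(us_scores)  # equal weights; real survey weights would differ
country_scores = [300, 320, 340]

print(country_percentile(us_scores, us_weights, country_scores))  # 50.0
```

Here the hypothetical country's average (320) exceeds half of the equally weighted U.S. scores, so it lands at the 50th percentile. With real survey weights, heavily weighted respondents would count for more of the U.S. distribution.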

All tests were administered in each country's main language(s) to residents ages 16 to 65. For more detail on the tests and the underlying methodology for these calculations, see the foreign-degree study linked above. Also, as noted in the tables, some participating nations administered the tests only in a sub-region of their territory. Finally, the UK is excluded because its scores for college and advanced degree holders are not separable.

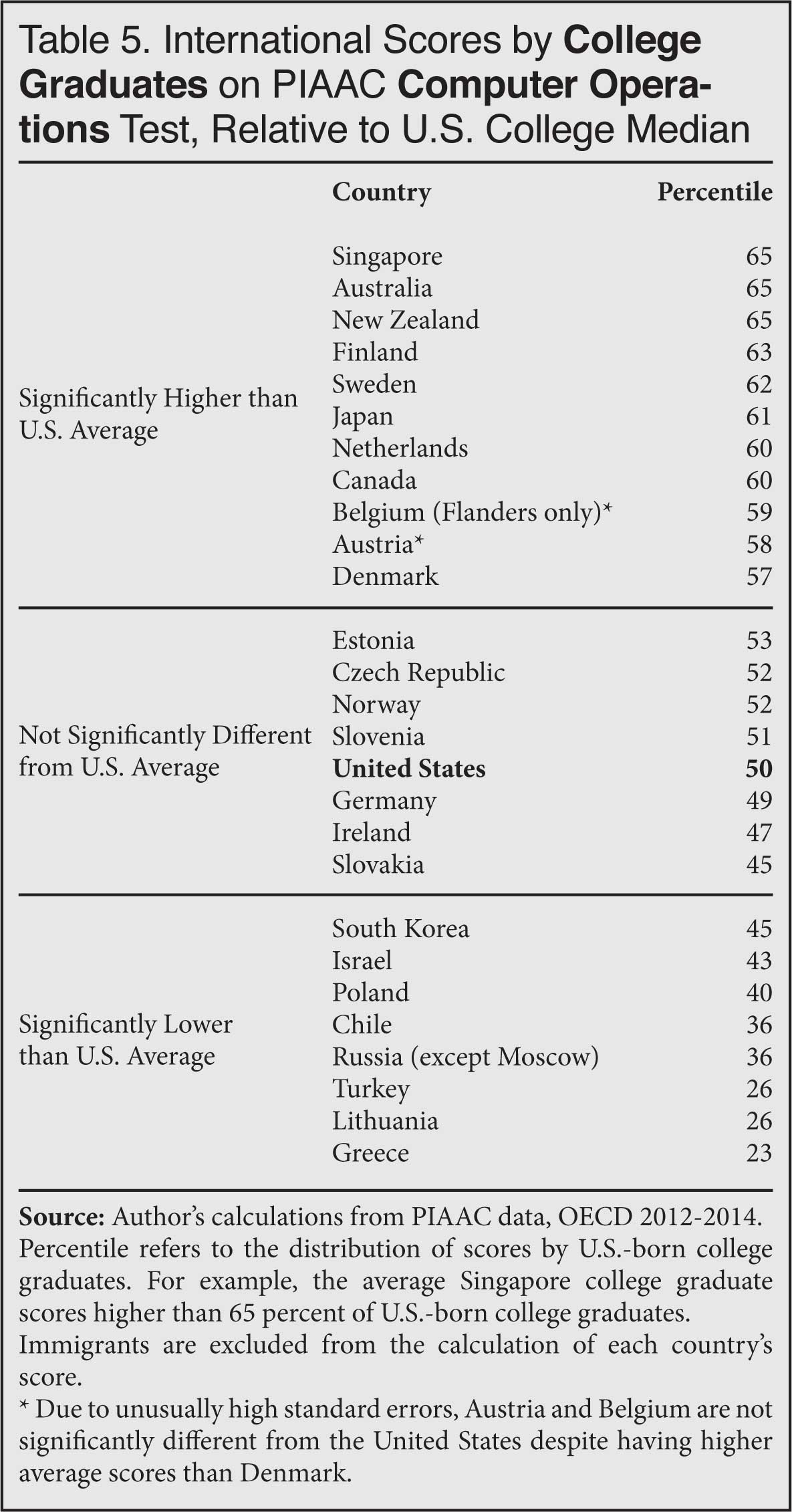

On all three tests, scores vary enormously across countries among people with the same level of education. For example, Table 3 indicates that the average college graduate in Japan scores better than 70 percent of American college graduates on the numeracy test, but the average college graduate in Italy scores better than just 34 percent of American college graduates. If we were to randomly select college graduates from Japan and Italy to come to the United States, the Japanese would apparently be far more skilled than the Italians on average, despite having the same level of education. Clearly, policymakers should not automatically assume that high levels of education reflect "high-skill" immigration.

Roughly speaking, and with some notable exceptions, college graduates in less developed OECD countries (as in Eastern Europe and the Mediterranean) tend to score lower than comparably educated people in the more developed areas (Northern Europe and parts of Asia). This tendency raises the concern that college graduates from poorer regions outside of the OECD may face a substantial skills deficit relative to their counterparts in the developed world. It also suggests that the high levels of education claimed by "diversity" immigrants, who come primarily from developing countries, should be regarded with some skepticism.

An important caveat is that international comparisons are valid only to the extent that data collection is consistent across countries. The OECD insists that countries administering the PIAAC had to "meet stringent standards relating to the target population, sample design, sample selection response rates, and non-response bias analysis." In addition, translations of the tests were "subject to strict guidelines, and to review and approval by the international consortium." Although response rates differed across countries, the OECD says that non-response bias is likely to be "low" or "minimal" in every nation. And in contrast to international student tests such as the PISA, there seems to be less global interest in PIAAC rankings, so countries have less incentive to tweak the testing procedures in their favor.

Despite the OECD's assurances, international comparisons are inherently less reliable than within-country comparisons, and readers should always interpret them cautiously. At the same time, the sheer magnitude of international skill disparities on display here — among people with supposedly the same level of education — is hard to ignore. Given that cross-country standards for college and advanced degrees appear to be so disparate, educational credentials are probably not a sufficient basis for a "high-skill" immigration system.
